Effective SEO (Search Engine Optimization) is paramount for driving organic traffic to websites in the competitive landscape of digital marketing. One of the critical challenges in SEO is understanding the structure and interconnections of website content. This process can be tedious and time-consuming if done manually. However, Python offers powerful tools to automate these tasks, making SEO efforts more efficient and data-driven.

Problem Statement

SEO professionals often face several challenges, including:

- Content Analysis: Identifying content clusters and gaps within a website can be overwhelming, especially for large sites. Manually analyzing content to find opportunities for new topics or improvements is time-consuming.

- Internal Linking: Effective internal linking is crucial for SEO, but visualizing and optimizing these links can be complex without the right tools.

- Efficient Crawling: Fetching and analyzing large amounts of page data is resource-intensive and can be inefficient without automation.

- Actionable Insights: Gaining clear and actionable insights into content structure is essential for informed decision-making, yet this often requires sophisticated analysis techniques.

How Python Can Help

Python provides robust libraries and tools that can automate and simplify these SEO challenges. Let’s delve into how Python can address each problem:

1. Content Analysis

Understanding how content is clustered on a website can help identify gaps and opportunities for new content. Python can automate the extraction and analysis of content from various pages, allowing SEO professionals to:

- Cluster Similar Pages: By using machine learning techniques like TF-IDF and clustering algorithms, Python can group similar pages together.

- Identify Content Gaps: Analyzing clusters helps identify areas where content is lacking or can be expanded.

2. Internal Linking

Effective internal linking strategies can significantly improve a website’s SEO. Python can help by:

- Visualizing Relationships: Creating visual representations of how pages link to each other can highlight areas for optimization.

- Optimizing Link Structure: Understanding the network of internal links allows for strategic improvements to enhance user navigation and SEO performance.

3. Efficient Crawling

Manually fetching and analyzing data from large websites is inefficient. Python automates this process by:

- Automating Data Collection: Using libraries like

requestsandBeautifulSoup, Python can crawl websites and fetch data quickly and efficiently. - Reducing Manual Effort: Automation saves significant time and resources, allowing SEO professionals to focus on strategic tasks.

4. Actionable Insights

Gaining clear insights into content structure is essential for making informed SEO decisions. Python enables this by:

- Generating Visualizations: Tools like

networkxandplotlycan create interactive graphs and visualizations to illustrate content relationships and clusters. - Providing Data-Driven Insights: Visual and analytical insights help in making data-driven decisions to improve SEO strategies.

Python Script Overview

To address these SEO challenges, let’s walk through a Python script step by step. This script automates the process of fetching URLs from sitemaps, filtering relevant URLs, fetching and analyzing page content, clustering URLs based on content similarity, and visualizing the clusters.

Step 1: Fetch URLs from Sitemaps

First, we extract URLs listed in a sitemap. A sitemap is an XML file that lists all the pages of a website.

import requests

from bs4 import BeautifulSoup

def get_urls_from_sitemap(url) -> list:

r = requests.get(url)

soup = BeautifulSoup(r.text, 'xml')

urls = [item.text for item in soup.find_all('loc')]

return urlsStep 2: Filter Relevant URLs

Next, we filter out irrelevant URLs such as pagination or category pages.

def filter_urls(urls):

filtered_urls = [url for url in urls if '/page/' not in url and 'category' not in url]

return filtered_urls

Step 3: Fetch Page Content

We then fetch and process the HTML content of each URL. This involves extracting the main content, title, and heading.

def fetch_page_content(url):

headers = {'User-Agent': 'Mozilla/5.0'}

try:

r = requests.get(url, headers=headers)

if r.status_code != 200:

return None, None, None

html_content = r.text

except Exception as e:

print(f"Error fetching {url}: {e}")

return None, None, None

soup = BeautifulSoup(html_content, 'lxml')

if soup.find('meta', attrs={'name': 'robots', 'content': 'noindex'}):

return None, None, None

title = soup.title.string if soup.title else ''

heading = soup.find(['h1', 'h2', 'h3'])

heading = heading.text.strip() if heading else ''

content = soup.find('main') or soup.find('article') or soup.find('div', {'class': 'main-content'})

if content:

for tag in content(['header', 'nav', 'footer', 'aside']):

tag.decompose()

text_content = ' '.join(content.stripped_strings)

else:

text_content = ''

return title, heading, text_content

Step 4: Calculate Content Similarity

Using TF-IDF (Term Frequency-Inverse Document Frequency), we calculate similarity scores between pages.

from sklearn.feature_extraction.text import TfidfVectorizer

def calculate_similarity(contents):

vectorizer = TfidfVectorizer(stop_words='english')

tfidf_matrix = vectorizer.fit_transform(contents)

return tfidf_matrix, vectorizerStep 5: Cluster URLs

We group similar pages together using Agglomerative Clustering.

from sklearn.cluster import AgglomerativeClustering

def cluster_urls(tfidf_matrix):

clustering_model = AgglomerativeClustering(n_clusters=None, distance_threshold=1.5)

clustering_model.fit(tfidf_matrix.toarray())

return clustering_model.labels_

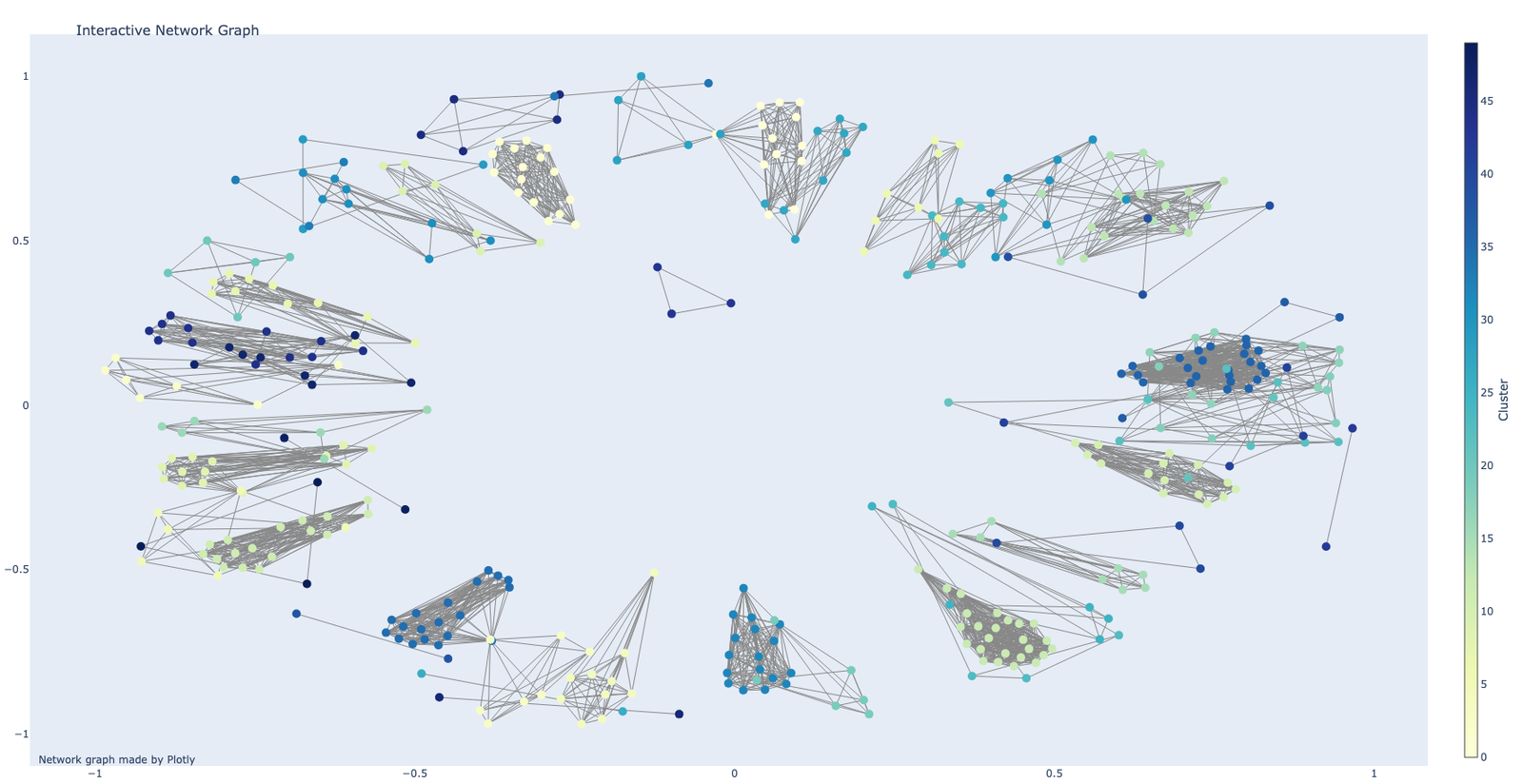

Step 6: Create Visualization

We visualize the clusters using an interactive network graph.

import networkx as nx

import plotly.graph_objs as go

import pandas as pd

def create_visualization(urls, labels, output_file):

df = pd.DataFrame({'url': urls, 'label': labels})

G = nx.Graph()

for label in df['label'].unique():

cluster_urls = df[df['label'] == label]['url']

for url in cluster_urls:

G.add_node(url, group=label)

for i, url1 in enumerate(cluster_urls):

for url2 in cluster_urls[i + 1:]:

G.add_edge(url1, url2)

pos = nx.spring_layout(G, k=0.3)

edge_trace = []

for edge in G.edges():

x0, y0 = pos[edge[0]]

x1, y1 = pos[edge[1]]

trace = go.Scatter(

x=[x0, x1, None],

y=[y0, y1, None],

mode='lines',

line=dict(width=1, color='#888'),

hoverinfo='none'

)

edge_trace.append(trace)

node_trace = go.Scatter(

x=[pos[node][0] for node in G.nodes()],

y=[pos[node][1] for node in G.nodes()],

text=[node for node in G.nodes()],

mode='markers',

hoverinfo='text',

marker=dict(

showscale=True,

colorscale='YlGnBu',

color=[G.nodes[node]['group'] for node in G.nodes()],

size=10,

colorbar=dict(

thickness=15,

title='Cluster',

xanchor='left',

titleside='right'

),

)

)

fig = go.Figure(data=edge_trace + [node_trace],

layout=go.Layout(

title='<br>Interactive Network Graph',

titlefont=dict(size=16),

showlegend=False,

hovermode='closest',

margin=dict(b=20, l=5, r=5, t=40),

annotations=[dict(

text="Network graph made by Plotly",

showarrow=False,

xref="paper", yref="paper",

x=0.005, y=-0.002

)],

xaxis=dict(showgrid=False, zeroline=False),

yaxis=dict(showgrid=False, zeroline=False))

)

fig.write_html(output_file)

return df

Step 7: Save and Load Data

Finally, we manage data persistence to save and load the processed data for future use.

import json

import os

def save_data(valid_urls, page_contents, similarity_scores, related_urls):

data = {

'valid_urls': valid_urls,

'page_contents': page_contents,

'similarity_scores': similarity_scores,

'related_urls': related_urls

}

with open('data.json', 'w') as f:

json.dump(data, f)

def load_data():

if os.path.exists('data.json'):

with open('data.json', 'r') as f:

data = json.load(f)

return data['valid_urls'], data['page_contents'], data['similarity_scores'], data['related_urls']

else:

return [], [], [], []Running the Script

To execute the script, ensure all required libraries are installed and run the script with the following command:

pip install requests beautifulsoup4 pandas scikit-learn networkx plotly tqdmComplete script:

import requests

from bs4 import BeautifulSoup

import pandas as pd

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.cluster import AgglomerativeClustering

import networkx as nx

import plotly.graph_objs as go

from tqdm import tqdm

import json

import os

# Step 1: Fetch URLs from Sitemaps

def get_urls_from_sitemap(url) -> list:

r = requests.get(url)

soup = BeautifulSoup(r.text, 'xml')

urls = [item.text for item in soup.find_all('loc')]

return urls

# Step 2: Filter Relevant URLs

def filter_urls(urls):

filtered_urls = [url for url in urls if '/page/' not in url and 'category' not in url]

return filtered_urls

# Step 3: Fetch Page Content

def fetch_page_content(url):

headers = {'User-Agent': 'Mozilla/5.0'}

try:

r = requests.get(url, headers=headers)

if r.status_code != 200:

return None, None, None

html_content = r.text

except Exception as e:

print(f"Error fetching {url}: {e}")

return None, None, None

soup = BeautifulSoup(html_content, 'lxml')

if soup.find('meta', attrs={'name': 'robots', 'content': 'noindex'}):

return None, None, None

title = soup.title.string if soup.title else ''

heading = soup.find(['h1', 'h2', 'h3'])

heading = heading.text.strip() if heading else ''

content = soup.find('main') or soup.find('article') or soup.find('div', {'class': 'main-content'})

if content:

for tag in content(['header', 'nav', 'footer', 'aside']):

tag.decompose()

text_content = ' '.join(content.stripped_strings)

else:

text_content = ''

return title, heading, text_content

# Step 4: Calculate Content Similarity

def calculate_similarity(contents):

vectorizer = TfidfVectorizer(stop_words='english')

tfidf_matrix = vectorizer.fit_transform(contents)

return tfidf_matrix, vectorizer

# Step 5: Cluster URLs

def cluster_urls(tfidf_matrix):

clustering_model = AgglomerativeClustering(n_clusters=None, distance_threshold=1.5)

clustering_model.fit(tfidf_matrix.toarray())

return clustering_model.labels_

# Step 6: Create Visualization

def create_visualization(urls, labels, output_file):

df = pd.DataFrame({'url': urls, 'label': labels})

G = nx.Graph()

for label in df['label'].unique():

cluster_urls = df[df['label'] == label]['url']

for url in cluster_urls:

G.add_node(url, group=label)

for i, url1 in enumerate(cluster_urls):

for url2 in cluster_urls[i + 1:]:

G.add_edge(url1, url2)

pos = nx.spring_layout(G, k=0.3)

edge_trace = []

for edge in G.edges():

x0, y0 = pos[edge[0]]

x1, y1 = pos[edge[1]]

trace = go.Scatter(

x=[x0, x1, None],

y=[y0, y1, None],

mode='lines',

line=dict(width=1, color='#888'),

hoverinfo='none'

)

edge_trace.append(trace)

node_trace = go.Scatter(

x=[pos[node][0] for node in G.nodes()],

y=[pos[node][1] for node in G.nodes()],

text=[node for node in G.nodes()],

mode='markers',

hoverinfo='text',

marker=dict(

showscale=True,

colorscale='YlGnBu',

color=[G.nodes[node]['group'] for node in G.nodes()],

size=10,

colorbar=dict(

thickness=15,

title='Cluster',

xanchor='left',

titleside='right'

),

)

)

fig = go.Figure(data=edge_trace + [node_trace],

layout=go.Layout(

title='<br>Interactive Network Graph',

titlefont=dict(size=16),

showlegend=False,

hovermode='closest',

margin=dict(b=20, l=5, r=5, t=40),

annotations=[dict(

text="Network graph made by Plotly",

showarrow=False,

xref="paper", yref="paper",

x=0.005, y=-0.002

)],

xaxis=dict(showgrid=False, zeroline=False),

yaxis=dict(showgrid=False, zeroline=False))

)

fig.write_html(output_file)

return df

# Step 7: Save and Load Data

def save_data(valid_urls, page_contents, similarity_scores, related_urls):

data = {

'valid_urls': valid_urls,

'page_contents': page_contents,

'similarity_scores': similarity_scores,

'related_urls': related_urls

}

with open('data.json', 'w') as f:

json.dump(data, f)

def load_data():

if os.path.exists('data.json'):

with open('data.json', 'r') as f:

data = json.load(f)

return data['valid_urls'], data['page_contents'], data['similarity_scores'], data['related_urls']

else:

return [], [], [], []

if __name__ == '__main__':

sitemap_urls = [

'https://www.mygreatlearning.com/blog/post-sitemap.xml',

'https://www.mygreatlearning.com/blog/post-sitemap2.xml',

'https://www.mygreatlearning.com/blog/post-sitemap3.xml'

]

valid_urls, page_contents, similarity_scores, related_urls = load_data()

if not valid_urls or not page_contents:

all_filtered_urls = []

for sitemap_url in tqdm(sitemap_urls, desc="Fetching URLs from sitemaps"):

urls = get_urls_from_sitemap(sitemap_url)

filtered_urls = filter_urls(urls)

all_filtered_urls.extend(filtered_urls)

all_filtered_urls = [url for url in all_filtered_urls if url not in valid_urls]

for url in tqdm(all_filtered_urls, desc="Fetching page contents"):

title, heading, content = fetch_page_content(url)

if content:

page_contents.append(f"{title} {heading} {content}")

valid_urls.append(url)

save_data(valid_urls, page_contents, similarity_scores, related_urls)

if not similarity_scores:

tfidf_matrix, vectorizer = calculate_similarity(page_contents)

similarity_scores = (tfidf_matrix * tfidf_matrix.T).toarray().tolist() # Save as list to be JSON serializable

labels = cluster_urls(tfidf_matrix)

related_urls = pd.DataFrame({'url': valid_urls, 'label': labels}).groupby('label')['url'].apply(list).to_dict()

save_data(valid_urls, page_contents, similarity_scores, related_urls)

else:

tfidf_matrix = pd.DataFrame(similarity_scores).values

vectorizer = TfidfVectorizer(stop_words='english')

labels = cluster_urls(tfidf_matrix)

df = create_visualization(valid_urls, labels, 'network_graph.html')

similar_urls_df = pd.DataFrame.from_dict(related_urls, orient='index').transpose()

similar_urls_df.to_excel('similar_urls.xlsx', index=False)

print(similar_urls_df)Explanation for Beginners

- Importing Libraries: The script begins by importing necessary libraries. These libraries provide functionalities for web scraping, data manipulation, machine learning, graph visualization, and data storage.

- Functions for Each Step:

get_urls_from_sitemap(url): Fetches URLs from a given sitemap URL.filter_urls(urls): Filters out irrelevant URLs.fetch_page_content(url): Fetches and processes the content of a given URL.calculate_similarity(contents): Calculates similarity scores between page contents using TF-IDF.cluster_urls(tfidf_matrix): Clusters URLs based on content similarity.create_visualization(urls, labels, output_file): Creates and saves an interactive network graph of clustered URLs.save_data(valid_urls, page_contents, similarity_scores, related_urls): Saves data to a JSON file.load_data(): Loads data from a JSON file if it exists.

- Main Script Execution:

- Defines the sitemap URLs to fetch.

- Loads existing data if available.

- If data is not available, fetches URLs and page contents, and saves them.

- Calculates similarity scores and clusters URLs if not already done.

- Creates a visualization of the clustered URLs.

- Saves a DataFrame of related URLs to an Excel file and prints it.

Output: While Running the script

Script Flow

- Load Data: Check if there’s existing data to load, which speeds up the process if it’s been run before.

- Fetch URLs and Content: If no existing data, fetch URLs from sitemaps and then fetch the content of each page.

- Calculate Similarity and Cluster: Compute similarity scores and cluster the URLs based on content.

- Create Visualization: Generate an interactive network graph of the clustered URLs.

- Save Results: Save the processed data and results for future use.

Benefits for SEO and Automation

By incorporating Python into your SEO toolkit, you can significantly streamline content analysis, optimize internal linking, and provide valuable insights through efficient crawling and visualization. This automation saves time and resources, allowing SEO professionals to focus on strategic tasks and make more informed, data-driven decisions to improve website performance.

Content Analysis

Understanding how content is clustered can help in identifying gaps and opportunities for new content.

Internal Linking

Visualizing the relationships between pages aids in optimizing internal linking strategies.

Efficient Crawling

Automates the process of fetching and analyzing large amounts of page data, saving time and resources.

Actionable Insights

The visualization provides clear insights into content structure, helping in making informed decisions for SEO improvements.

By leveraging the power of Python, SEO professionals can enhance their strategies, improve website performance, and stay ahead in the competitive digital landscape.